AI-Native Product Development Workshop at NY Tech Week

SECTION 1: A New Era for Builders

AI-native is the fastest product shift in history

Cursor hit $100M ARR in under 18 months, faster than any SaaS product we’ve seen. ChatGPT reached 800M weekly users in under three years. AI-native is not emerging; it has already arrived, and it’s scaling faster than any previous platform shift.

Every team has access to frontier tech

Thanks to publicly available model APIs, anyone can now tap into world-class models. You don’t need a PhD or a GPU cluster, just a real user problem and a product vision.

Entire categories of product are suddenly viable

From personal assistants to adaptive UIs, many ideas that were once impractical because of cost or complexity are now within reach with AI-native design, even for small teams.

You can build what only big companies used to do

Speech, vision, search, and personalization once required massive engineering teams. Now you can build them with APIs, scaffolding, and smart feedback loops.

The limiting factor is no longer compute—it’s product thinking

The infrastructure is accessible. The bottleneck is knowing what to build, how to structure learning, and how to earn user trust in probabilistic systems.

Most companies won’t get this right. You can.

Shipping AI-native means more than adding LLMs. It means building feedback systems and trust scaffolds, and designing for adaptability. Few will. You can.

If you’re early, you get to define the pattern

The frameworks, UX patterns, and team habits of AI-native work are still emerging. Your builds today can shape the standards others follow tomorrow.

We are not here to add AI. We’re here to rethink software

This workshop is about building products around AI—not injecting it into old workflows.

SECTION 2: Reality Check

Vibe Code ≠ Real Code

Fast demos get attention—but fragile code won’t survive real users, edge cases, or production demands. Engineering discipline still matters.

Juniors Alone Can’t Ship AI Products

This stack isn’t just about clever prompts. It requires infrastructure judgment, trust thinking, and hard tradeoff calls—things you only learn by doing.

Most AI Products Fail on Trust, Not Tech

Users don’t churn because the model hallucinated once—they leave because they don’t understand what it will do next. Trust is the true failure point.

Welcome Back to Constraints: Tokens, Latency, Limits

AI infra looks magical until rate limits hit, latency spikes, and context windows overflow. You need to design with cost and throughput in mind.
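A common way to absorb rate limits is to retry with exponential backoff and jitter. The sketch below assumes a hypothetical model client that raises `RateLimitError` on HTTP 429; `call_with_backoff` and its parameters are illustrative, not any vendor’s SDK.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error a model client raises when it gets HTTP 429."""


def call_with_backoff(call, max_attempts=5, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call `call()`; on RateLimitError, wait with exponential backoff plus full jitter and retry."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error instead of hiding it
            # Ceiling grows 0.5s, 1s, 2s, 4s, ... up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: wait a random time up to the ceiling so clients don't retry in lockstep.
            sleep(random.uniform(0, ceiling))
```

Passing `sleep` as a parameter keeps the function testable, and capping attempts keeps a rate-limit storm from turning into unbounded latency for the user.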

Your AI Might Work. Until It Doesn’t.

LLMs are non-deterministic. Success today doesn’t guarantee success tomorrow. If you don’t plan for change, you’re always one prompt away from chaos.
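One way to make non-determinism visible is to run the same prompt several times and measure how often the outputs agree. This is a minimal sketch: `generate` stands in for whatever model call you use, and exact-match agreement after light normalization is an assumed, deliberately crude metric.

```python
from collections import Counter
from typing import Callable


def consistency_rate(generate: Callable[[str], str], prompt: str, runs: int = 10) -> float:
    """Share of runs that match the most common output (after strip + lowercase)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outputs = [generate(prompt).strip().lower() for _ in range(runs)]
    _, top_count = Counter(outputs).most_common(1)[0]
    return top_count / runs
```

A rate that falls over time for the same prompt and model settings is an early signal that behavior is shifting underneath you.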

Prompts Decay. Evals Compound.

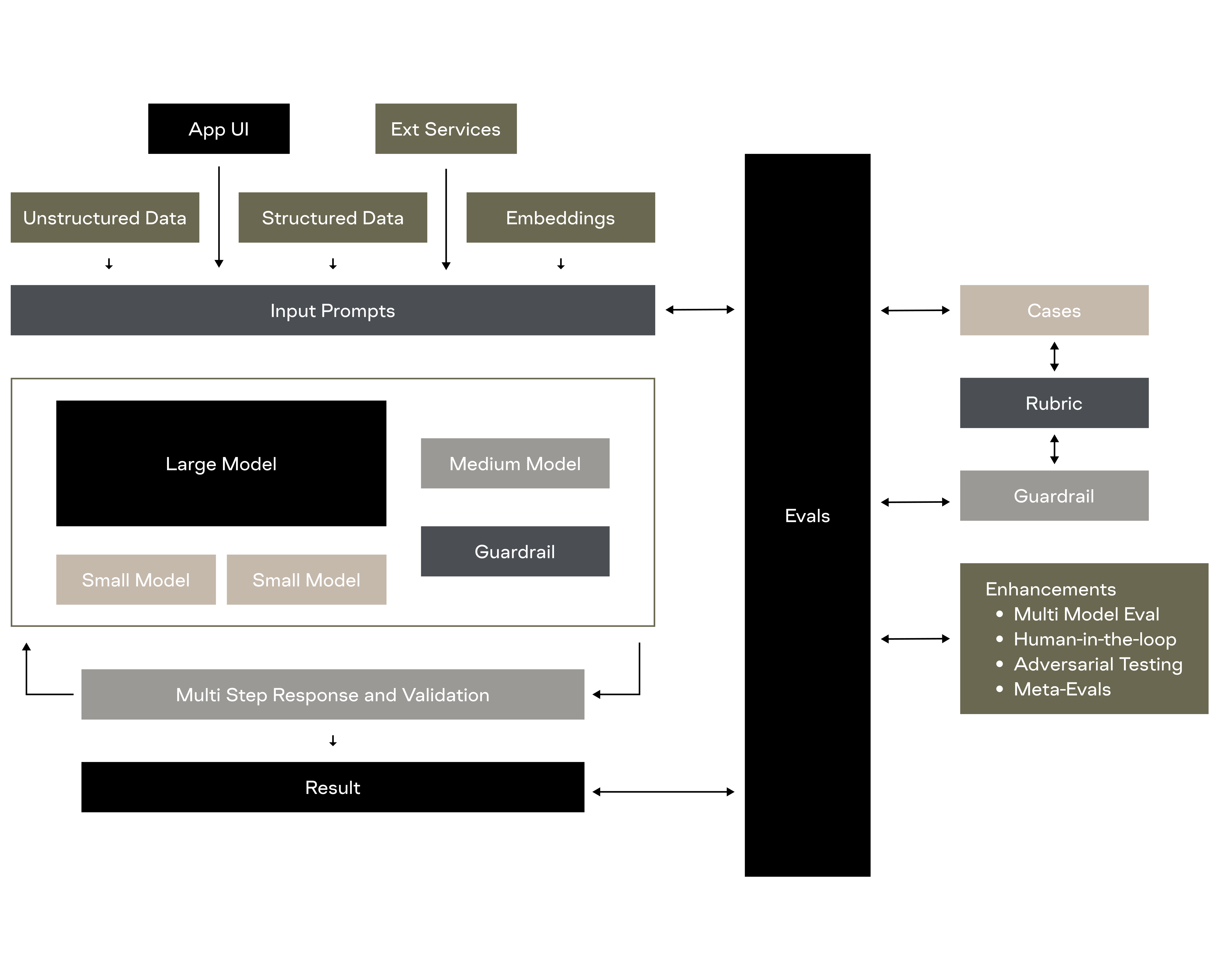

Your prompt is brittle—it will change, break, or drift. What matters is whether your eval system catches that before your users do.
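A minimal regression gate, assuming each eval case can be checked with a simple substring rule (real suites often use richer graders). `EvalCase`, `pass_rate`, and `check_regression` are hypothetical names; the idea is that a new prompt ships only if it scores at least as well as the current one on the same cases.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    input: str
    must_contain: str  # simple string check; swap in a richer grader as needed


def pass_rate(run_prompt: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of cases whose output contains the expected text (case-insensitive)."""
    passed = sum(1 for c in cases if c.must_contain.lower() in run_prompt(c.input).lower())
    return passed / len(cases)


def check_regression(baseline: Callable[[str], str], candidate: Callable[[str], str],
                     cases: List[EvalCase], tolerance: float = 0.0) -> bool:
    """True if the candidate prompt scores no worse than baseline minus tolerance."""
    return pass_rate(candidate, cases) >= pass_rate(baseline, cases) - tolerance
```

Running this in CI on every prompt change is what lets the eval suite, rather than your users, catch the break.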

SaaS Users Don’t Want to Learn Prompting

Prompt literacy isn’t a prerequisite for good UX. You’re not building for AI experts—you’re building for busy, skeptical humans.

Thin Wrappers Die Fast

A product that’s just a prompt with a UI doesn’t last. Defensibility comes from workflows, context, and trust—not just access to the model.

SECTION 3: Foundational Principles

Prompt Debt Is the New Tech Debt

Tweak too many things without versioning or evals, and your product becomes unmaintainable. Fast prompt work needs structure to scale.
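Structure can start small. The sketch below is a hypothetical in-memory registry where every prompt change becomes a new numbered, immutable version with a short content hash, so evals and logs can reference exactly which text ran. A production setup would persist this, for example in your repo or a database.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    note: str  # why this change was made

    @property
    def digest(self) -> str:
        # Short content hash so logs and eval results can point at the exact text.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


@dataclass
class PromptRegistry:
    _versions: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def publish(self, name: str, template: str, note: str) -> PromptVersion:
        """Append a new immutable version; earlier versions are never edited in place."""
        history = self._versions.setdefault(name, [])
        pv = PromptVersion(name, len(history) + 1, template, note)
        history.append(pv)
        return pv

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Return the latest version, or a specific 1-based version number."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]
```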

No One Pays for a Demo

Demos get attention. But if it breaks under real load—or doesn’t earn daily use—it’s not a product, it’s a prototype with PR.

AI-Native Means Redesigning the Team, Not Just the Tech

Shipping AI-native features requires new roles, new habits, and new decision-making. Your team’s mindset is part of the stack.

Right-Size Your Stack: Avoid Over- and Under-Building

If you underbuild, you’ll be rewriting everything later. If you overbuild, you’ll lose speed and flexibility. AI-native needs tight scaffolding, not excess.

Modality Check: Not Every Medium Is Ready

Text is reliable. Images, 3D, and voice are still early. Before you integrate, ask: will this make the product better—or just newer?

Where Vibe Code Belongs in Production

Some parts of your stack can be scrappy. Internal tools, prototyping loops, and agent scaffolding? Fine. But customer-facing systems need discipline.

Smart UX Isn’t Frictionless. It’s Contextual.

AI-native UX doesn’t mean fewer steps. It means better ones—designed to reduce uncertainty and invite trust.

SECTION 4: LIFFT Framework

The 5 Shifts That Saved Our Product

These weren’t academic choices—they were survival moves. Each shift helped us turn chaos into a system we could build on.

LEARNING:

Feedback Loops > Feature Lists

Your most important feature is the loop that tells you what’s working, what’s drifting, and what’s next.
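As a sketch of such a loop, assume each output produces a feedback event tagged with the prompt version that generated it, and that "accepted" means the user kept the output (an assumed signal; thumbs-up, copy, or no-edit are common proxies). Aggregating by version turns scattered reactions into a comparison you can act on.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FeedbackEvent:
    prompt_version: str
    accepted: bool  # assumed signal, e.g. user kept the output without rewriting it


def acceptance_by_version(events):
    """Map each prompt version to the fraction of its outputs users accepted."""
    totals = defaultdict(lambda: [0, 0])  # version -> [accepted, total]
    for e in events:
        totals[e.prompt_version][0] += int(e.accepted)
        totals[e.prompt_version][1] += 1
    return {v: acc / n for v, (acc, n) in totals.items()}
```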

INFRA:

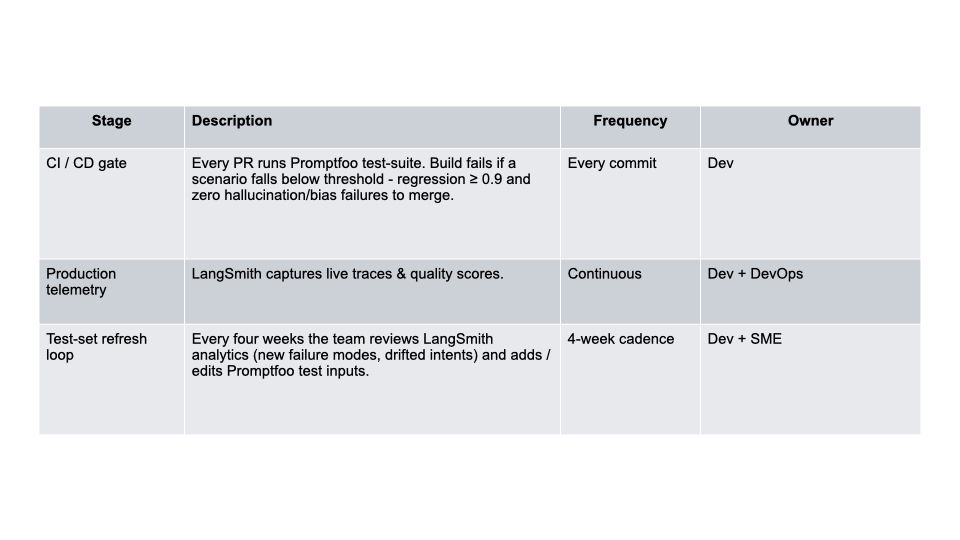

You Need Ops for Prompts

Prompt versioning, testing, rollback, eval dashboards—without these, you’re flying blind.

FOUNDATION:

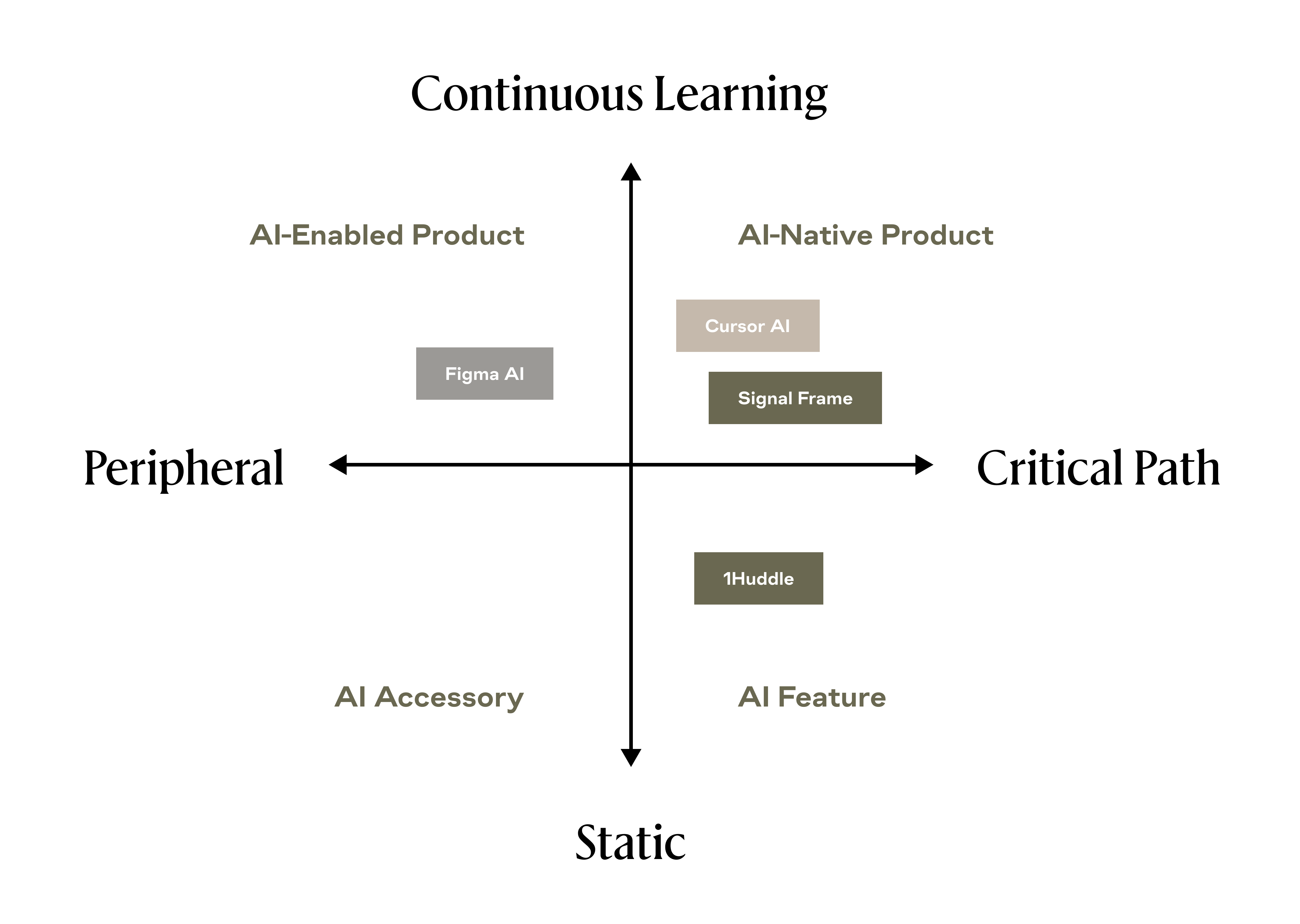

If AI is Not Core, It’s Cosmetic

If you can remove the AI and the product still works, it’s not AI-native. The AI should shape experience, not decorate it.

FLOW:

Teams That Shape Behavior, Not Just Ship Features

PMs own prompt behavior. Designers shape tone and trust. QA handles chaos. Your org chart is part of your product.

TRUST:

Confidence You Can Measure

Trust is not assumed—it’s earned through feedback, editability, reliability, and clear system behavior.

SECTION 5: What’s Hard

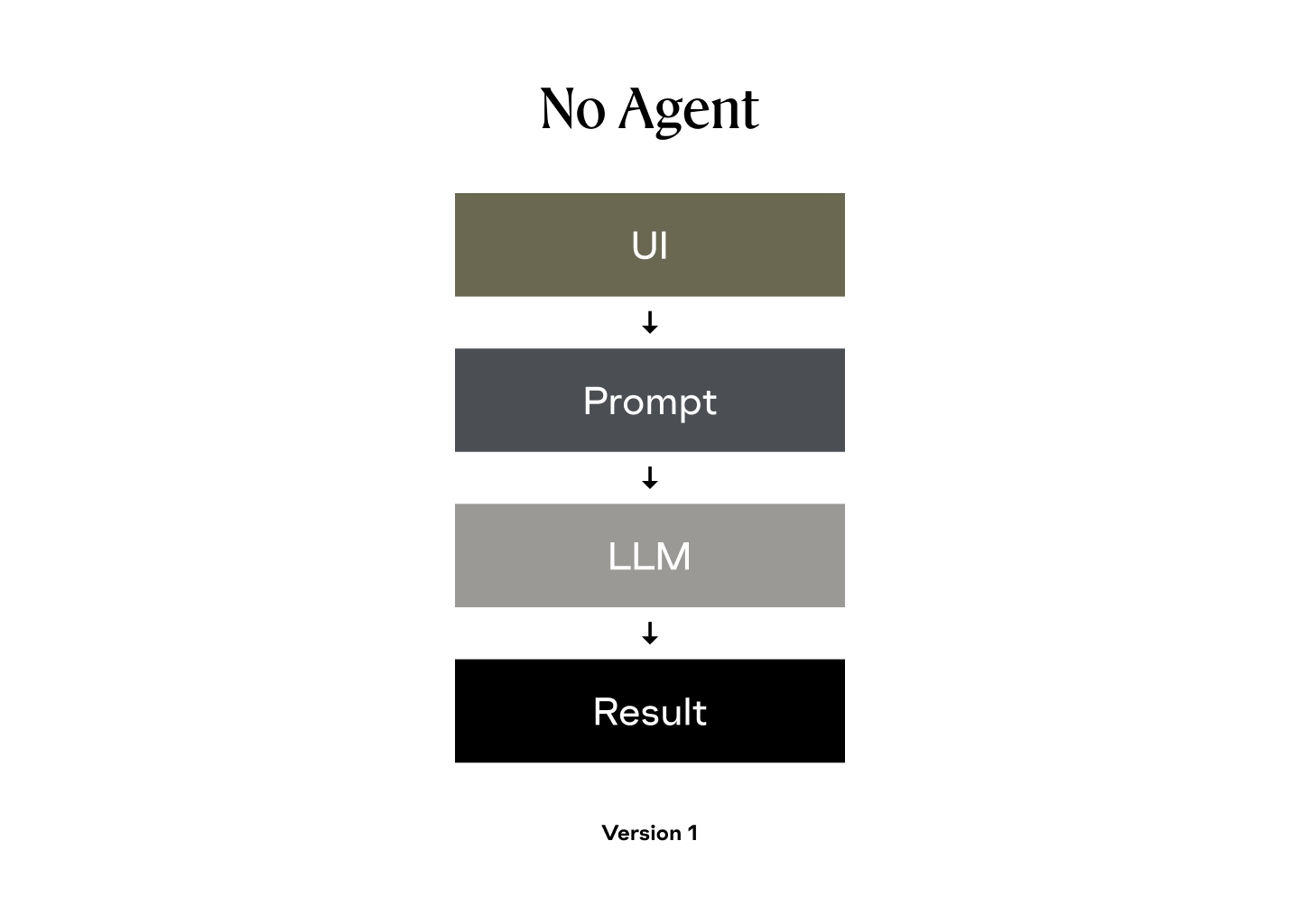

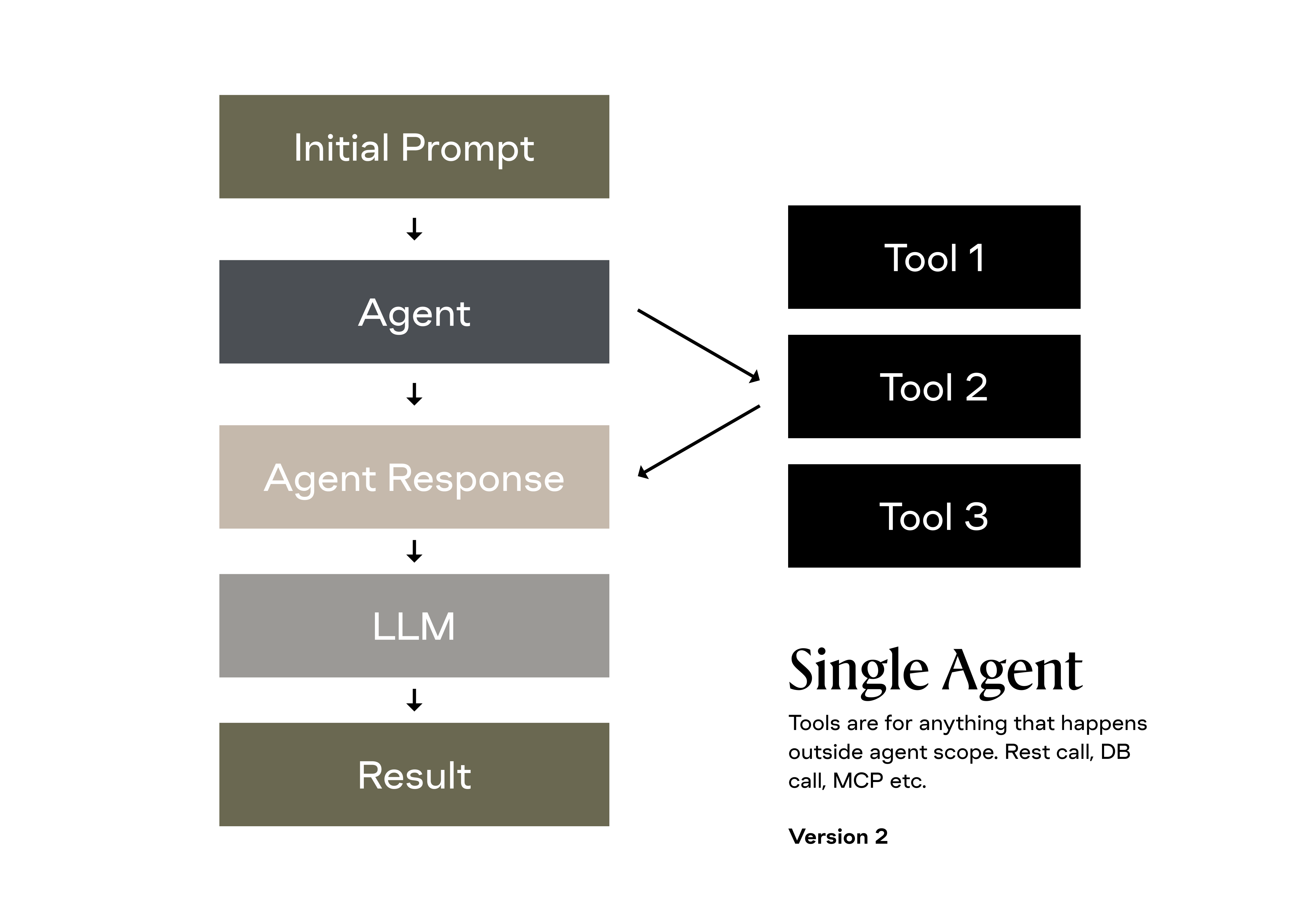

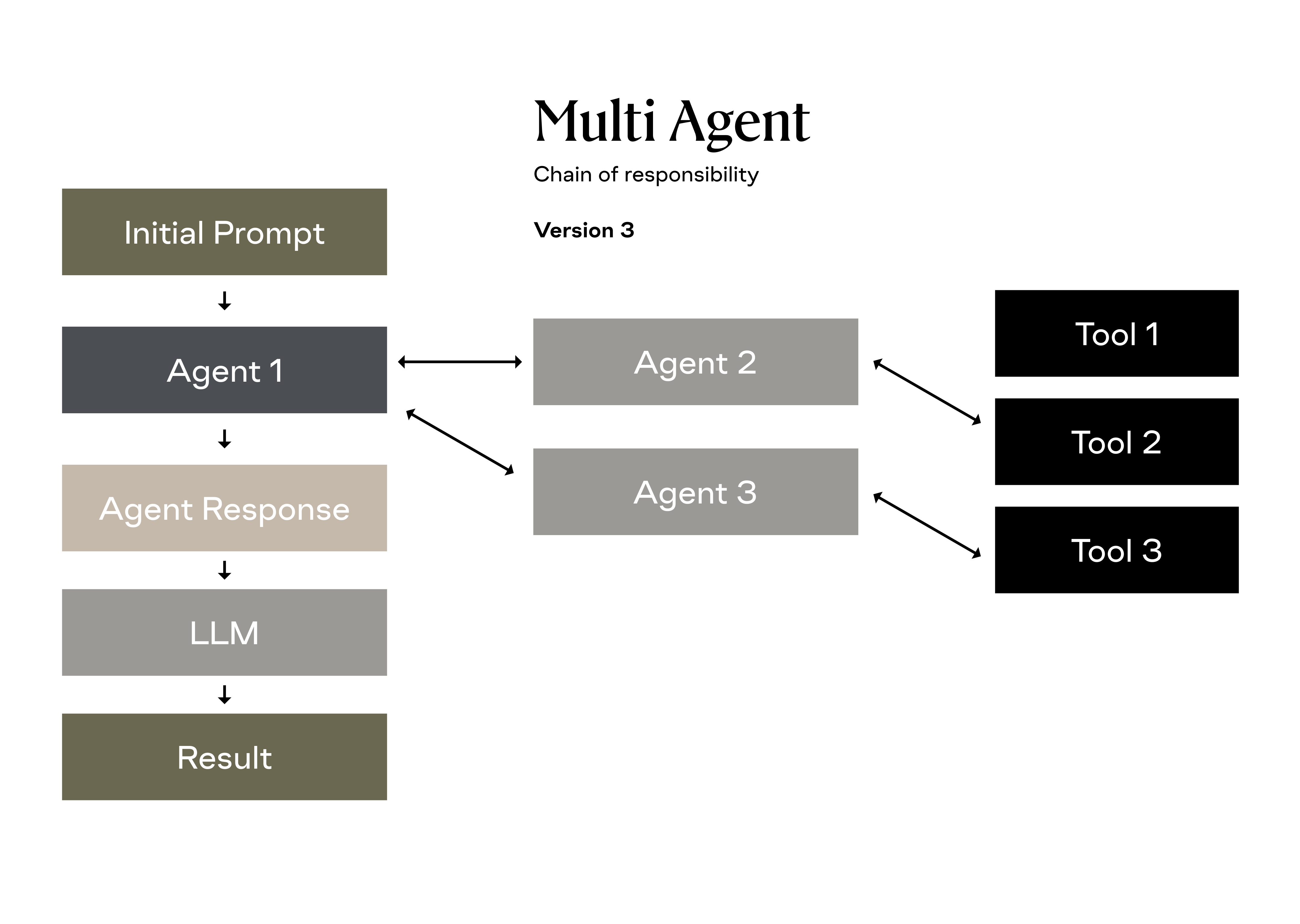

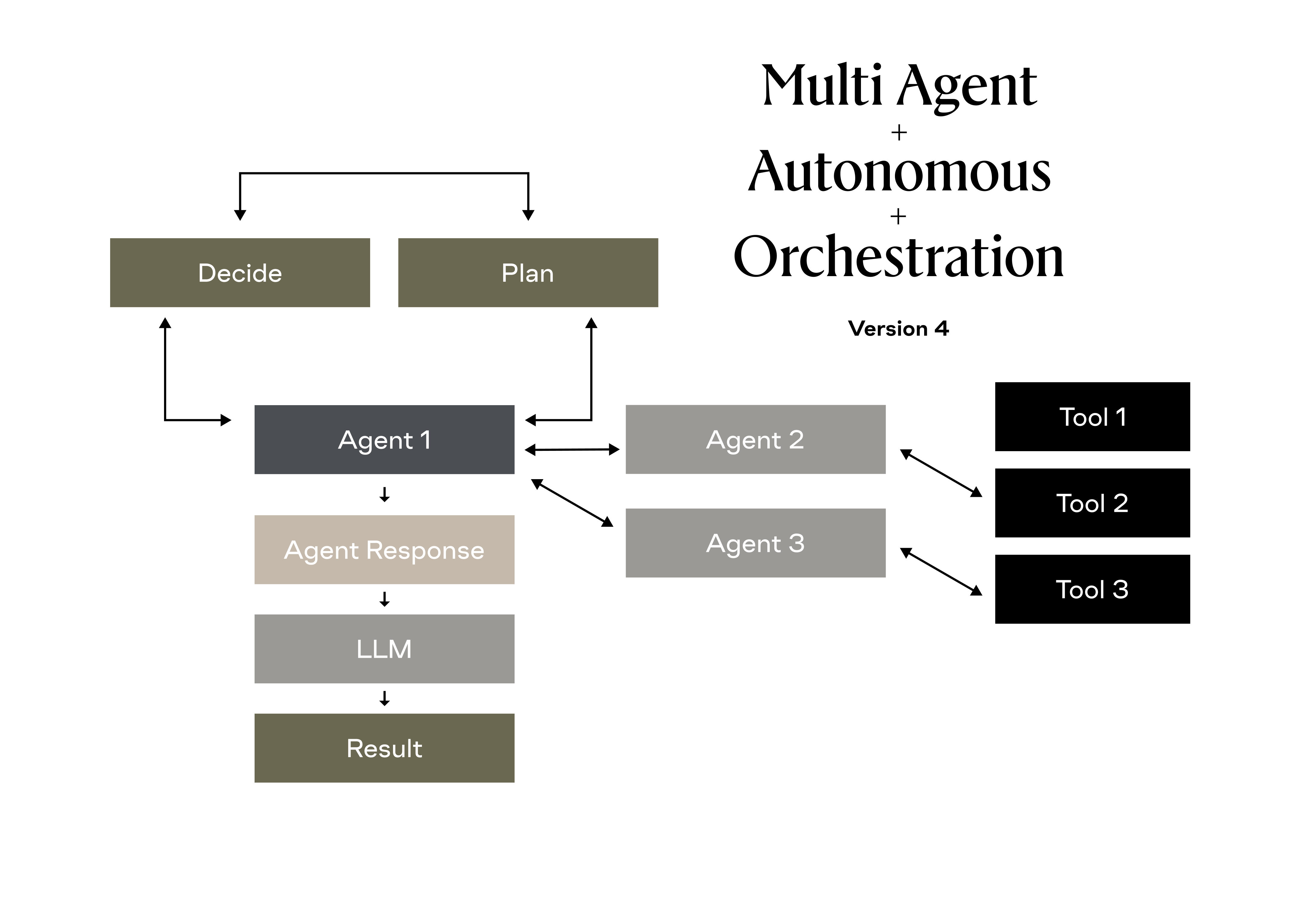

From Prompt to Agent: The Fullstack Sequence

In AI-native systems, prompts evolve into agents—and agents become the new backend. You’re not just replacing UI—you’re rearchitecting your logic layer through orchestration.
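To make the prompt-to-agent step concrete, here is a minimal orchestration loop under stated assumptions: `decide` stands in for a model call that returns either a tool call or a final answer, and tools are plain Python functions. Real agent frameworks add structured tool schemas, memory, and error handling; the `max_steps` cap here is the simplest guard against runaway loops.

```python
from typing import Callable, Dict, List, Tuple

# A tool call is (tool_name, argument); a final answer is ("final", text).
Action = Tuple[str, str]


def run_agent(decide: Callable[[List[str]], Action],
              tools: Dict[str, Callable[[str], str]],
              task: str, max_steps: int = 5) -> str:
    """Ask the model for the next action, run the tool, and feed the result back."""
    transcript = [f"task: {task}"]
    for _ in range(max_steps):
        name, arg = decide(transcript)
        if name == "final":
            return arg
        if name not in tools:
            # Report the mistake back to the model instead of crashing.
            transcript.append(f"error: unknown tool {name}")
            continue
        result = tools[name](arg)
        transcript.append(f"{name}({arg}) -> {result}")
    raise RuntimeError("agent hit max_steps without a final answer")
```

In this shape, the "backend logic" lives in which tools exist and how their results flow back, while the model decides the sequence.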

Prompts Rot. Evals Compound.

Prompts are temporary logic. Evals are your safety net and strategy—they help you test, learn, and avoid regression. Your tests are what scale, not your cleverest prompt.

Your Stack = Learning Loops × Cheap Shots

Fast iterations are good. Learning loops make them valuable. Together, they let you adapt faster than your competitors can react.

PromptOps Is Still a Black Art

Everyone’s doing prompt work, but few do it in a way that is observable, versioned, or reproducible. It’s still more art than ops, and that gap is your risk.
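Observability can begin with one structured log line per model call. The sketch assumes you record the prompt name and version, model identifier, temperature, a hash of the rendered input, the output, and latency; the field names and the list-based `sink` are illustrative placeholders for a real log pipeline.

```python
import hashlib
import json
import time
from dataclasses import asdict, dataclass


@dataclass
class CallRecord:
    prompt_name: str
    prompt_version: int
    model: str
    temperature: float
    input_sha256: str
    output: str
    latency_ms: float


def logged_call(generate, prompt_name, prompt_version, model, temperature, rendered_prompt, sink):
    """Run the model call and append one JSON record describing exactly what ran."""
    start = time.perf_counter()
    output = generate(rendered_prompt)
    record = CallRecord(
        prompt_name=prompt_name,
        prompt_version=prompt_version,
        model=model,
        temperature=temperature,
        input_sha256=hashlib.sha256(rendered_prompt.encode("utf-8")).hexdigest(),
        output=output,
        latency_ms=(time.perf_counter() - start) * 1000,
    )
    sink.append(json.dumps(asdict(record)))  # one JSON line per call
    return output
```

With these fields, a bad output can be traced to the exact prompt version and settings that produced it, which is the minimum needed to reproduce it.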

Evals Are the Work — Not the Afterthought

If you aren’t testing for consistency, regressions, and drift, you’re not shipping—you’re gambling.
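Drift checks can be as simple as comparing recent eval scores against a baseline window. In this sketch the 0.05 threshold is an arbitrary example, and scores are assumed to be per-run pass rates between 0 and 1.

```python
from statistics import mean


def detect_drift(baseline_scores, recent_scores, max_drop=0.05):
    """Return (drifted, drop): drifted is True when the recent mean falls more than max_drop below baseline."""
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > max_drop, drop
```

Scheduling this against a fixed eval set, even when no one has touched the prompt, is what surfaces drift caused by model or dependency changes.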

Tooling Is Primitive. Your Team Has to Be Elite.

You won’t find off-the-shelf maturity. You’ll need sharp judgment, shared language, and systems thinking to stay ahead.

ChatGPT UX ≠ Product UX

One input box isn’t a product. AI-native UX must guide ambiguity, manage trust, and support recovery.

Some Friction Is Healthy

Friction is not failure—it’s design. When used well, it earns trust, prevents errors, and clarifies logic.

No Rollbacks? No Trust.

You need to treat prompt changes like code. If you can’t safely revert a broken update, your product isn’t ready.
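Treating prompt changes like code means a deploy should be reversible in one step. A minimal sketch, assuming prompt versions are identified by strings and that a hypothetical `PromptRelease` object holds the deploy history:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptRelease:
    """Tracks which prompt version is live, keeping history so any deploy can be undone."""
    history: List[str] = field(default_factory=list)

    @property
    def live(self) -> str:
        return self.history[-1]

    def deploy(self, version: str) -> None:
        self.history.append(version)

    def rollback(self) -> str:
        """Revert to the previously deployed version and return it."""
        if len(self.history) < 2:
            raise RuntimeError("nothing to roll back to")
        self.history.pop()
        return self.live
```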

You’ll Always Be Running Two Versions

There is no single production prompt. You need test branches, staged releases, and fallbacks—because AI doesn't fail predictably.
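Running two versions usually means a stable prompt plus a candidate shown to a small, consistent slice of users, with a fallback when the candidate fails. The sketch assumes hash-based bucketing of user IDs and treats any exception from the candidate as a failure; `answer`, `stable`, and `candidate` are hypothetical names.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Deterministically map a user to 0..99 so they see the same variant every time."""
    return int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100


def answer(user_id, query, stable, candidate, canary_percent=10):
    """Route a slice of users to the candidate prompt; fall back to stable if it fails."""
    if bucket(user_id) < canary_percent:
        try:
            return candidate(query), "candidate"
        except Exception:
            pass  # in practice, log this failure before falling back
    return stable(query), "stable"
```

Hashing the user ID, rather than choosing randomly per request, keeps each user on one variant, which makes their feedback comparable across versions.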

AI Infra Isn’t Cheap — It’s Just Hidden

Latency, token usage, retries, hallucinations—they all carry cost. Design for efficiency or pay for it later.
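A back-of-the-envelope cost model helps make hidden costs visible. The sketch assumes per-million-token pricing (a common billing unit) and models retries as extra full-price calls; the prices in the example are placeholders, not any provider’s rates.

```python
def monthly_cost_usd(requests_per_day, input_tokens, output_tokens,
                     usd_per_m_input, usd_per_m_output, retry_rate=0.0, days=30):
    """Rough spend estimate. Assumes per-million-token pricing and that each retry
    is a full extra call with the same token counts."""
    per_call = (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000
    calls = requests_per_day * days * (1 + retry_rate)
    return per_call * calls


# Illustrative numbers only; the prices are placeholders, not real rates.
estimate = monthly_cost_usd(10_000, 2_000, 500, usd_per_m_input=3.0, usd_per_m_output=15.0, retry_rate=0.10)
print(f"${estimate:,.0f} per month")
```

With these hypothetical inputs, each call costs $0.0135, and 330,000 calls (including 10% retries) come to about $4,455 over 30 days. Trimming input tokens or cutting the retry rate shows up directly in that number.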

Tech Debt Breaks AI Products Fast

Without clean structure and eval coverage, small changes ripple into system instability. You won’t feel it—until you do.

“Ship Fast” Only Works If You Can Learn Fast

Speed is only safe if it’s attached to insight. Otherwise, you’re shipping chaos faster.

SECTION 6: But It’s Possible

You Don’t Have to Solve Everything Day One

The goal isn’t to master the entire stack. The goal is to build one loop that works—and learn from it.

Know Your Axes:

- Stage

- Use Case

- Risk

What you build depends on what you’re building for. Context should drive your architecture, not trends.

Start with Prompts. Build the System Around Them.

Your prompt is the behavior layer. Your evals, infra, and UX should orbit around it.

SECTION 7: Closing & Reflection

Vibe Code: Works Fast, Doesn’t Scale

Use it to learn fast, not to scale. Don’t confuse working once with working reliably.

Ask Yourself: What’s Your Real Foundation?

Is your product still valuable if the model changes? If not, you don’t have a moat—you have a wrapper.

Where Are You Most Fragile? Trust, Infra, or Flow?

Every team has a weak link. Find yours early and reinforce it—before the friction hits your users.

What’s Working Today Might Break Tomorrow

Prompt drift, dependency shifts, silent failures—AI-native products age differently. You need to check what you can’t see.

You’re Not Behind — You’re Early

The stack is immature. Best practices are forming. If you're in this room, you're ahead.

This Work Is Hard. But It’s Worth Doing

Building AI-native is messy. But it lets you build smarter systems, more ambitious UX, and real user leverage.

Don’t Wait for Perfect. Just Start Smart

You don’t need to solve for scale on Day 1. Just don’t trap yourself with shortcuts you can’t unwind.

Let’s Go Build What Only You Can Build

Your unique insight is your differentiator—not your model, your prompt, or your tooling. Build from there.